Several years ago, when you couldn’t open LinkedIn or a business publication without the term “digital transformation” as the lede, I began a passion project to test a hypothesis bouncing around in my head.

“Was all the excitement around the transformative ability of this technology real and demonstrable?”

“Could human factors, like leadership and organizational culture, be an under-rated or under-utilized contributor to the success – or failure – of these initiatives?”

Spoiler alert – after 60+ interviews across geographies and industries, the answers were typically “mmm…mostly” to question 1 and “yes, absolutely” to question 2.

The “mostly” opinion typically came from organizations and pundits who acknowledged that the habit of digitizing a bad or ill-conceived analog process wasn’t transformative, it was just turning frustrating paper processes into shiny, and still frustrating, pixels. True transformation was frigging hard and the tech wasn’t a silver bullet.

The “yes, absolutely” perspectives talked about transformations powered by leaders willing to listen and learn versus dictate, cultures of agency, transparency and accountability, and change management done with the employees versus to them. They also described an organizational willingness to experiment and pilot, to establish clear guardrails but accept, not punish, inevitable mistakes and failures.

For many, there’s a sense that Digital Transformation was an unkept promise.

A massively expensive, significantly disruptive effort that didn’t ultimately deliver on its much-hyped ROI and ended up tinkering with industries, not transforming them.

Watching the current AI tsunami engulf my feed, I’m left with an unmistakable feeling of déjà vu.

That, yet again, we’re placing our faith and our futures in a mesmerizing shiny technology and failing to consider or actively involve the critical humans – our leaders and our cultures – in the transformations we seek.

I also fear organizations are poised to fall into a number of avoidable traps if the prevailing “move quickly and break things” ethos continues.

The throes of Efficiency Theatre

Almost daily, headlines announce employee layoffs, from Amazon to Accenture, typically couched in terms of accelerating AI adoption and creating new “efficiencies” across the organization. For most organizations, those efficiencies have yet to translate to growth, increased productivity or sustained gains in stock value. While headcount reductions are a classic sign of a technology early in its adoption, we need to push harder on how AI is growing productivity and sustaining growth if we want to label it transformative. If your touted gains in NPS or NPD aren’t demonstrable in two quarters, you’re in theatre, not transformation.

Governance as a by-product

The dizzying frequency of AI product launches and enhancements can be overwhelming but what can’t be overstated is the need for more prevailing governance. Not as a handbrake to progress but governance as a confirmation that the potential of this technology isn’t running unfettered. Externally that means real C-suite action on AI ethics, age-gating, addressing recurring LLM biases, and tackling accelerating cybersecurity risk profiles. Internally that means clear instructions, training and universally understood guardrails for employees. For all the rapid advances toward Agentic, I’m confident most reasonable consumers want to see more robust guardrails on this tech before they let some bot cruise the internet with their credit card and health information.

The risks of vendor consolidation

Then there’s the consolidation of the market into a handful of companies that are driving the only real energy in the S&P. A fact which should be alarming to those of us who watched the bubbles of 1999 and 2008 burst, cratering organizations and bankrupting livelihoods globally. While it’s interesting to watch the bifurcation between Western, principally US, and Chinese firms on their approach to AI, we should recognize that competition, not consolidation, provides more options for firms and doesn’t lock executives into an ever-decreasing set of players.

This is still virgin territory

If we’re brutally honest, there is no AI Playbook of “best practices” or library of teachable case studies, and yet there’s an ocean of experts and gurus claiming such expertise. Buyer beware. We’re just moving too fast, and this phenomenon is too new for anyone to credibly say they’ve cracked the code on this. Anyone selling you and your leadership team on their best practices is either dangerously naïve or selling you a reheated version of their Digital Transformation Playbook.

The traps that loom largest for me are the same ones I witnessed with Digital Transformation.

The leadership and culture ones.

The ones related to our humans, not the technology.

On the leadership part.

I feel your pain. Satisfying a restless Board of Directors while trying to mix some AI magic growth dust into your share price and keeping the baying on your analyst calls at…ummm…bay.

That’s not for the faint of heart.

Some suggestions:

Make the one thing, the 1 thing – what is the most critical business objective you need AI to attack? In conversations with colleagues and threads on LinkedIn, most organizations seem to be deploying AI to cure all their aspirations and challenges in one fell swoop. Pick a lane – growth, customer service, NPD, speed to market – and make that the focus. Interesting to see HBR tell leaders to “prune the pilot programs” – in the same breath as experiment more – but there’s something to be said for deep focus, rather than broad and shallow, in this moment.

Role model like it’s Fashion Week – not just encouraging words and CEO all-staff videos. Get into the tech yourself, get battered and bruised by it – then show your company what you’ve learned, the mistakes and investments of personal time you’ve made. Show your work. If you want commitment from your people – especially on something they’re already leery and wary about – show them the way.

Be transparent – Again, a common practice, rarely practised. If your teams are being evaluated against their AI Agility, tell them. If you expect certain targets or objectives to be met, share the metrics and the timetable. And if you’re still determining how and where AI is going to impact your strategy, your organization and your people, be particularly transparent about that. Leaders are already facing a Trust deficit inside their organizations. Transparency isn’t a panacea but with the high emotional load our people are already carrying, it’s certainly a strong way to bolster their flagging morale.

Take no risks with Risk – I hope that recent public comments from Sam Altman and Elon Musk caused your professional impulses to shudder. Their avant-garde proclamations of significant and inevitable AI accidents or mishaps should give you genuine pause. There’s never been a more urgent need to bring your Risk & Governance colleagues into the frame. From explicit company policy on data handling to scenario planning your cybersecurity threat vectors, contemplate how many ways AI could crater your firm with the same enthusiasm you ask how it will boost your productivity. Let the AI tech-bros play fast and loose with their accountabilities; please don’t join them. In short, if you can’t explain why your AI models fail, then you’ve not earned the right to scale them.

On the culture piece.

Think Agency then Agentic – Lots of encouraging talk about “freeing people to do higher-order tasks”. That starts with giving your folks genuine agency (and a few guardrails of course) to experiment, to find and tackle the hidden growth opportunities, to avoid wasteful meetings, to self-organize, to ignore your latent bureaucracy. If AI is going to truly augment your most talented, then take the shackles off. Human agency is more powerful - and available - right now than AI Agents.

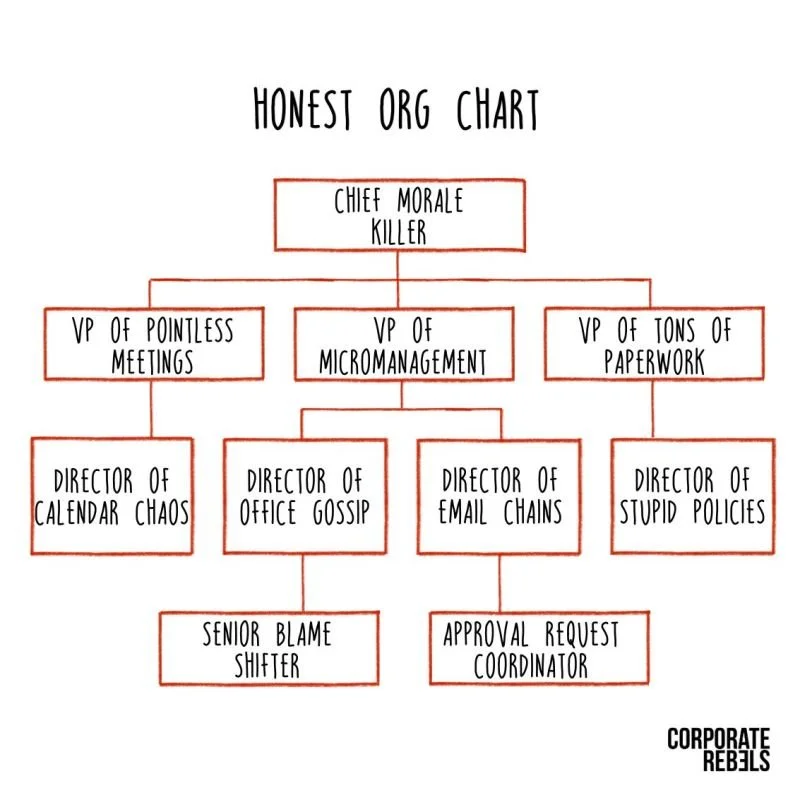

Corporate Rebels have a brilliant sense of irony - and a great perspective on Flat Organizations. Image Credit: Corporate Rebels

Flatter is Faster – The most common lament of organizations remains speed-to-action. Typically, that’s a symptom of bureaucratic bloat. Too many (needless) layers. Too much oversight. It’s great to offload the repetitive and the mundane to the LLMs. Pointless if your people still need to work through a labyrinth of an org chart to get anything approved. While Holacracy, and other modalities, might not be right for your firm, Flatter and self-managed is coming back in vogue.

Networks = Networth – I’m not channeling Tony Robbins but the informal networks inside your firm are the untapped gold mines of this AI era. If you’re not familiar with the great strides in Organizational Network Analysis, you’re missing a significant unlock inside your culture. I’m talking specifically about identifying and activating the 3% of folks who impact 90% of any transformation. Read that last line again, 3% impact 90% of your desired transformation.

Get Emotional – With all the news of AI adoption and layoffs, we’re witnessing proportionate increases in employee burnout, anxiety, fatigue, despondency and “quiet hugging”. That should worry any C-Suite executive no matter their function. We all know that our emotions drive the decisions we make – or avoid – so the astute culture leaders know that understanding and addressing the huge emotional burden our employees are feeling isn’t being soft. It’s tackling the really hard stuff. The hard stuff that directly translates to hard numbers.

That’s my list of traps and opportunities.

I fully acknowledge I’ve likely missed a few. If so, please add the traps and opportunities you’re seeing and that concern you.

Personally, I remain a techno-optimist and believe (hope and pray) that AI will be a great unlock for business and society.

But not if it’s at the expense of our humanity.

Ideally this AI journey will not be Digital Transformation Déjà vu but another equally elegant French phrase: presque vu.

Which means “The intense feeling of being on the very brink of a powerful epiphany, insight or revelation”

En avant mes amis, en avant

Relentless change initiatives are leading to an unprecedented rise in employee fatigue. Image created by ChatGPT 5

Further Reading

· Sam Altman chatting about his belief we are still going to have some “strange and scary moments with AI”. The a16z Podcast October 2025.

· Michele Zanini and Gary Hamel wrote the playbook on busting bureaucracy – if you haven’t got a copy of “Humanocracy” then pick up the recently updated and expanded 2nd Edition.

· “Stop Running So Many AI Pilots” – Harvard Business Review November-December 2025

· Organizational Network Analysis – Huge fans of the brilliant folks at Innovisor in Denmark. If you want to get your hands really dirty, this is an excellent practitioner’s guide on the topic.

· AI Adoption and Culture – Big fan of Paul Gibbons and James Healey’s book “Adopting AI. The People-First Approach”

En avant mes amis, en avant – Onwards my friends onwards